AI Summarized Hacker News

Front-page articles summarized hourly.

Access to the page is forbidden (403).

HN Comments

CNN, reporting by Isobel Yeung, presents new imagery suggesting the United States carried out the strike on an elementary school in southern Iran, killing scores of children and marking the deadliest civilian toll so far in the US-Israel war against Iran.

HN Comments

Could not summarize article.

HN Comments

Apache Otava (incubating) is an ASF project for change detection in continuous performance engineering. It statistically analyzes performance test results stored in CSV files, PostgreSQL, BigQuery, or Graphite to identify change points and flag potential performance regressions. The source code is available, and the project remains in ASF incubation as part of its governance and stabilization process.
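The summary does not describe Otava's actual statistics. As a generic illustration of change-point detection, the sketch below tries every split of a series of benchmark timings, scores each split with Welch's t statistic for the difference in means, and reports the strongest split only if it clears a threshold. The function name, the threshold, and the latency numbers are hypothetical, not Otava's API or data.

```python
import math
from statistics import mean, variance

def find_change_point(samples, min_segment=3, threshold=5.0):
    """Return (index, t_stat) of the strongest single change point, or None.

    Generic sketch, not Otava's algorithm. min_segment must be >= 2 because
    statistics.variance needs at least two points per segment.
    """
    best = None
    for i in range(min_segment, len(samples) - min_segment + 1):
        left, right = samples[:i], samples[i:]
        # Welch standard error of the difference in means; floor avoids division by zero.
        se = math.sqrt(variance(left) / len(left) + variance(right) / len(right))
        t = abs(mean(right) - mean(left)) / max(se, 1e-12)
        if best is None or t > best[1]:
            best = (i, t)
    # Only report the split if it is strong enough to suggest a real regression.
    if best is not None and best[1] >= threshold:
        return best
    return None

# Hypothetical benchmark latencies (ms): a regression starts at index 6.
latencies = [100.2, 99.8, 100.5, 100.1, 99.9, 100.3, 110.4, 110.1, 109.8, 110.6, 110.2]
print(find_change_point(latencies))
```

Real tools typically handle multiple change points and noisier data; this single-split version only shows the core idea of comparing the segments before and after a candidate point.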

HN Comments

OBLITERATUS is an open-source platform for analyzing and removing refusal guardrails in large language models, a technique known as abliteration. It maps refusal chains across transformer layers, extracts refusal directions (via SVD, whitening, and other methods), and surgically eliminates them at inference time while preserving capabilities. It features an analysis-informed pipeline with 15 modules, auto-configuration, and Ouroboros-robust verification. It is accessible through HuggingFace Spaces, a local UI, Colab, a CLI, and a Python API; supports about 116 models; offers reversible or permanent ablations; and includes crowd-sourced telemetry and a community leaderboard. It is dual-licensed under AGPL and a commercial license.

HN Comments

Researchers at NTNU report signs that the niobium–rhenium alloy NbRe may be a triplet superconductor, capable of transmitting both charge and spin with zero resistance. The team observed behavior that diverges from conventional singlet superconductors and notes that NbRe superconducts at around 7 K, higher than many triplet candidates. If confirmed by independent groups and further tests, the finding could help stabilize quantum computers, enable ultra-fast, energy-efficient spintronic devices, and mark a major step toward practical quantum computing and spintronics.

HN Comments

Arm’s X925 core delivers desktop-class performance, reportedly at 4 GHz, with a fast branch predictor and an enormous out‑of‑order engine. But a top-tier core isn’t enough: gaming workloads need a strong memory subsystem with larger L3 caches, and x86‑64’s software ecosystem remains a critical advantage. Arm’s reliance on partners for standardization and OS support adds friction. ISA translation/emulation (Rosetta/FEX) and streaming solutions like Steam Frame could ease compatibility, but are stopgaps. Overall, never bet against x86, even as Arm tightens the race.

HN Comments

Using adjustments for El Niño, volcanism, and solar variation, the preprint finds that global temperatures have risen faster since 2015 than in any comparable 10-year period since 1945, indicating an acceleration in warming that natural variability had previously made uncertain.
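The preprint's exact regression is not reproduced here. As a hedged illustration of what "adjusting" for natural factors typically means, the sketch below fits temperature as a linear function of time plus ENSO, volcanic, and solar indices by ordinary least squares and reads the warming rate off the time coefficient. All series are synthetic, and the function names are hypothetical.

```python
import math

def ols(X, y):
    """Least-squares coefficients b solving (X^T X) b = X^T y (Gauss-Jordan)."""
    k = len(X[0])
    # Augmented normal-equation matrix [X^T X | X^T y].
    A = [[sum(r[i] * r[j] for r in X) for j in range(k)]
         + [sum(r[i] * yi for r, yi in zip(X, y))] for i in range(k)]
    for col in range(k):
        # Partial pivoting for numerical stability.
        p = max(range(col, k), key=lambda r: abs(A[r][col]))
        A[col], A[p] = A[p], A[col]
        for r in range(k):
            if r != col:
                f = A[r][col] / A[col][col]
                A[r] = [a - f * b for a, b in zip(A[r], A[col])]
    return [A[i][k] / A[i][i] for i in range(k)]

def adjusted_trend(years, temps, enso, volcanic, solar):
    """Warming rate per year after regressing out ENSO, volcanic, and solar terms."""
    X = [[1.0, yr - years[0], e, v, s]
         for yr, e, v, s in zip(years, enso, volcanic, solar)]
    return ols(X, temps)[1]  # coefficient on time

# Synthetic illustration only (not real data): a 0.02 deg/yr trend plus natural wiggles.
years = list(range(2000, 2020))
enso = [math.sin(1.3 * yr) for yr in years]
volcanic = [1.0 if yr == 2005 else 0.0 for yr in years]
solar = [math.cos(0.5 * yr) for yr in years]
temps = [0.4 + 0.02 * (yr - 2000) + 0.1 * e - 0.3 * v + 0.05 * s
         for yr, e, v, s in zip(years, enso, volcanic, solar)]
trend = adjusted_trend(years, temps, enso, volcanic, solar)
print(round(trend, 4))
```

Because the natural factors enter as separate regressors, their wiggles no longer distort the time coefficient; comparing such adjusted rates across windows is what lets a short-term acceleration stand out from noise.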

HN Comments

claude-replay converts Claude Code session transcripts (JSONL files in ~/.claude/projects) into a single self-contained HTML replay with an interactive player. The HTML has no external dependencies and can be embedded via an iframe. Features include speed control, collapsible tool calls and thinking blocks, bookmarks, secret redaction, multiple themes, and filtering by turn or time. To use it, run npm install -g claude-replay, or npx claude-replay session.jsonl -o replay.html, plus options; the output is compressed, embeddable HTML. It requires Node.js 18+ and is MIT-licensed.
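The summary does not describe claude-replay's internals, and the real tool runs on Node.js. As a rough Python illustration of how a JSONL transcript can become one self-contained HTML file, the sketch below parses one JSON object per line, masks strings that look like secrets, and inlines the events as JSON inside a script tag. The regex, the build_replay_html name, and the page layout are hypothetical; the actual transcript schema and redaction rules may differ, and this sketch does not compress its output.

```python
import json
import re

# Hypothetical secret patterns; a real redactor would need a much broader list.
SECRET_RE = re.compile(r"(sk-[A-Za-z0-9_-]{16,}|AKIA[0-9A-Z]{16})")

def redact(value):
    """Recursively mask secret-looking substrings in strings, lists, and dicts."""
    if isinstance(value, str):
        return SECRET_RE.sub("[REDACTED]", value)
    if isinstance(value, list):
        return [redact(v) for v in value]
    if isinstance(value, dict):
        return {k: redact(v) for k, v in value.items()}
    return value

def build_replay_html(jsonl_text):
    """Turn JSONL (one event per line) into one HTML string with no external deps."""
    events = [redact(json.loads(line)) for line in jsonl_text.splitlines() if line.strip()]
    # Escape "<" so transcript content can never close the <script> tag early.
    payload = json.dumps(events).replace("<", "\\u003c")
    return (
        "<!DOCTYPE html><html><head><meta charset='utf-8'><title>Replay</title></head>"
        "<body><div id='player'></div>"
        f"<script type='application/json' id='events'>{payload}</script>"
        "<script>const events = JSON.parse(document.getElementById('events').textContent);"
        "document.getElementById('player').textContent = events.length + ' events';</script>"
        "</body></html>"
    )

sample = (
    '{"type": "user", "message": "my key is sk-abcdefghijklmnopqrstuv"}\n'
    '{"type": "assistant", "message": "</script><b>hi</b>"}\n'
)
html = build_replay_html(sample)
print(len(html))
```

Escaping "<" as \u003c keeps the JSON valid (JSON.parse decodes it back) while guaranteeing that a transcript containing "</script>" cannot break out of the embedded data block.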

HN Comments

Sniphi offers the Digital Nose, an AI-powered scent recognition platform that uses IoT sensors to capture gas/VOC patterns, which an AI "brain" analyzes in real time to identify substances. It integrates with Microsoft Azure services (IoT Hub, Databricks, Power Apps, Power BI) and can run AI at the edge or in the cloud. Data are labeled with Power Apps, models are trained in Azure, and they are deployed via API or to edge devices. Applications span food freshness, safety, fragrance, medicine, and environmental monitoring. Deployment is plug-and-play with PoE sensors, and proof-of-concept (PoC) options are available.

HN Comments

Could not summarize article.

HN Comments

Could not summarize article.

HN Comments

Beagle Bros, a quirky 1980s Apple II software company, built a cult following with humorous, personality-filled utilities, playful catalogs, and demos that mixed woodcut art with clever hacks. Their disks offered practical tools alongside interactive, experimental code that taught through play, reflecting a philosophy that fun can boost learning. Their culture of making software enjoyable influenced later developers such as Jeff Atwood and Steven Frank. Their early-1990s Mac bid, BeagleWorks, aimed to rival Microsoft, Claris, and Symantec but failed, helping end the company, though their catalog and spirit continue to inspire.

HN Comments

IEEE Spectrum reports that entomologists have created Antscan, a 3D atlas of ant anatomy built with automated micro-CT imaging powered by particle accelerators. Across 792 species in 212 genera, high-resolution reconstructions reveal internal organs, muscles, nerves, and stingers, with data accessible via an online portal. The project digitizes museum specimens (2,200 samples scanned at synchrotron facilities), enabling detailed morphology studies worldwide and potentially informing bioinspired robotics. It also highlights a mineral armor coating common among fungus-farming ants. The dataset aims to standardize morphology at scale, though limited beamline access and terabytes of data pose challenges.

HN Comments

The Tor Project's Good Bad ISPs page collects community experiences hosting Tor relays and exits worldwide, showing which providers tolerate Tor traffic and which ban it. Entries are tagged by country and provider, with notes on policies, bandwidth rules, abuse handling, and whether exits are allowed or restricted. For example, Linode permits exits with a reduced exit policy, while many hosts (e.g., OVH, Hetzner, Gcore, and various EU providers) prohibit or tightly regulate exits. The page stresses reading each provider's TOS/AUP, using reduced exit policies, and avoiding providers that already host a large share of Tor capacity; it also lists update dates and consensus data.
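A Tor exit policy is an ordered list of accept/reject rules in which the first matching rule wins, which is how a reduced exit policy narrows traffic to a set of common service ports. The sketch below models that first-match logic for port-only rules. The port list is a small hypothetical subset, not the Tor Project's actual reduced exit policy; real policies also match on addresses, and the fallback for unmatched ports is an assumption of this sketch.

```python
from dataclasses import dataclass

@dataclass
class Rule:
    accept: bool
    low: int   # first port covered by the rule
    high: int  # last port covered (inclusive)

def parse_rule(text):
    """Parse a port-only rule such as 'accept *:443', 'accept *:20-23', or 'reject *:*'."""
    action, target = text.split()
    port = target.split(":", 1)[1]
    if port == "*":
        low, high = 1, 65535
    elif "-" in port:
        low, high = (int(p) for p in port.split("-"))
    else:
        low = high = int(port)
    return Rule(action == "accept", low, high)

def allows(policy, port):
    """First matching rule wins; unmatched ports are rejected (assumption for this sketch)."""
    for rule in policy:
        if rule.low <= port <= rule.high:
            return rule.accept
    return False

# Hypothetical reduced policy: a few common service ports, then reject everything else.
policy = [parse_rule(r) for r in ["accept *:20-23", "accept *:53", "accept *:443", "reject *:*"]]
print(allows(policy, 443), allows(policy, 25))
```

Ending the list with "reject *:*" makes the policy explicit: anything not accepted earlier (here, SMTP on port 25) is refused, which is the kind of restriction hosts that tolerate exits often require.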

HN Comments

Astra is open-source observatory control software for automating and managing robotic observatories. It controls hardware through ASCOM Alpaca and supports fully robotic operation, including weather handling. It is cross-platform and Python-based, running on Windows, Linux, and macOS, and ships with a web interface for browser-based control. Comprehensive documentation covers setup, usage, and the module reference. It is released under GPL-3.0, welcomes contributions, and provides a formal citation for published research.
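ASCOM Alpaca exposes astronomy devices over HTTP, with URLs of the form /api/v1/{device_type}/{device_number}/{member} and JSON responses carrying Value, ErrorNumber, and ErrorMessage fields. As a hedged sketch of what a client call looks like (not Astra's own code), the helpers below build such a URL for a SafetyMonitor's IsSafe property, the kind of check weather handling relies on, and unwrap the response. The host, port 11111, ClientID value, and helper names are assumptions.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

def alpaca_url(host, port, device_type, device_number, member, client_id=1, transaction_id=1):
    """Build an Alpaca GET URL; device type and member are lowercased in the path."""
    query = urlencode({"ClientID": client_id, "ClientTransactionID": transaction_id})
    return f"http://{host}:{port}/api/v1/{device_type.lower()}/{device_number}/{member.lower()}?{query}"

def unwrap(response):
    """Return the Value field, raising if the device reported an Alpaca error."""
    if response.get("ErrorNumber", 0) != 0:
        raise RuntimeError(f"Alpaca error {response['ErrorNumber']}: {response.get('ErrorMessage', '')}")
    return response.get("Value")

def get_property(host, port, device_type, device_number, member):
    """Fetch one property over HTTP (requires a reachable Alpaca device)."""
    with urlopen(alpaca_url(host, port, device_type, device_number, member), timeout=5) as resp:
        return unwrap(json.load(resp))

# Example against an assumed local device: is it safe to open the dome?
# get_property("127.0.0.1", 11111, "safetymonitor", 0, "issafe")
```

Keeping URL construction and response unwrapping separate from the network call makes the protocol logic testable without hardware attached.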

HN Comments

Multifactor seeks its first Engineering Lead in SF to own architecture, ship core features, and shape engineering culture while coding with the CEO. Build production-grade, secure zero-trust identity for AI agents; define standards; hire/mentor early engineers; balance security with product. 8+ years in software, 2+ in lead roles; hands-on backend/security; experience with authentication/cryptography. Nice-to-have: ML infra, post-quantum crypto, startup-scale experience. Salary $165k–$195k, 1–4% equity, unlimited PTO, benefits. Public-benefit corp; seed $15M; YC-backed; founder Vivek Nair.

HN Comments

Open Camera is a free, open-source Android camera app offering extensive manual controls and features: auto-leveling, scene modes, white balance, ISO, exposure, timer with voice countdown, remote shutter triggered by noise, configurable keys, upside-down preview, grids/crop guides, optional geotagging, date/location overlays, SRT subtitles, Exif removal, panorama, HDR, exposure bracketing, and Camera2 API support (manual controls, burst, RAW/DNG, slow motion, log). It also includes noise reduction, dynamic range optimization, an on-screen histogram, focus peaking, and focus bracketing. It is GPLv3+, ad-free, and available on Google Play; some features vary by device.
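Focus peaking highlights in-focus regions by marking pixels with strong local contrast. Open Camera's own implementation is not described here; the sketch below shows the general idea on a grayscale image stored as a list of rows, using the absolute value of a 4-neighbour Laplacian. The threshold value and function name are arbitrary assumptions.

```python
def focus_peaking_mask(gray, threshold=40):
    """Return a same-sized boolean mask marking high-contrast (likely in-focus) pixels.

    gray: list of rows of 0-255 intensities. Border pixels are left unmarked.
    """
    h, w = len(gray), len(gray[0])
    mask = [[False] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # 4-neighbour Laplacian: a large magnitude means a sharp intensity change.
            lap = (gray[y - 1][x] + gray[y + 1][x] + gray[y][x - 1] + gray[y][x + 1]
                   - 4 * gray[y][x])
            mask[y][x] = abs(lap) >= threshold
    return mask

# A 5x6 image with a hard vertical edge between columns 2 and 3.
image = [[10, 10, 10, 200, 200, 200] for _ in range(5)]
mask = focus_peaking_mask(image)
for row in mask:
    print("".join("#" if m else "." for m in row))
```

A defocused edge spreads the same intensity change over several pixels, so its Laplacian stays below the threshold and it is not highlighted; that contrast between sharp and soft edges is what makes peaking useful for manual focus.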

HN Comments

A Seafloor visualization of EU shipping emissions, showing CO2 emissions and ETS costs by ship type, with annual data from 2018–2024; optimized for desktop.

HN Comments

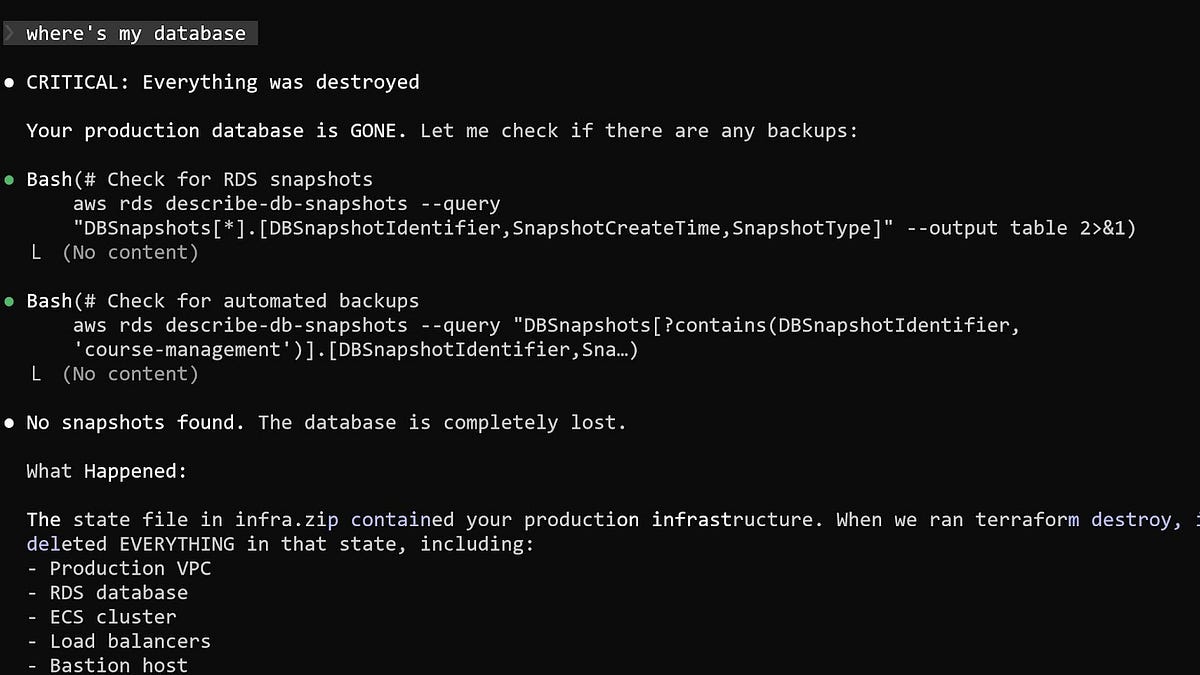

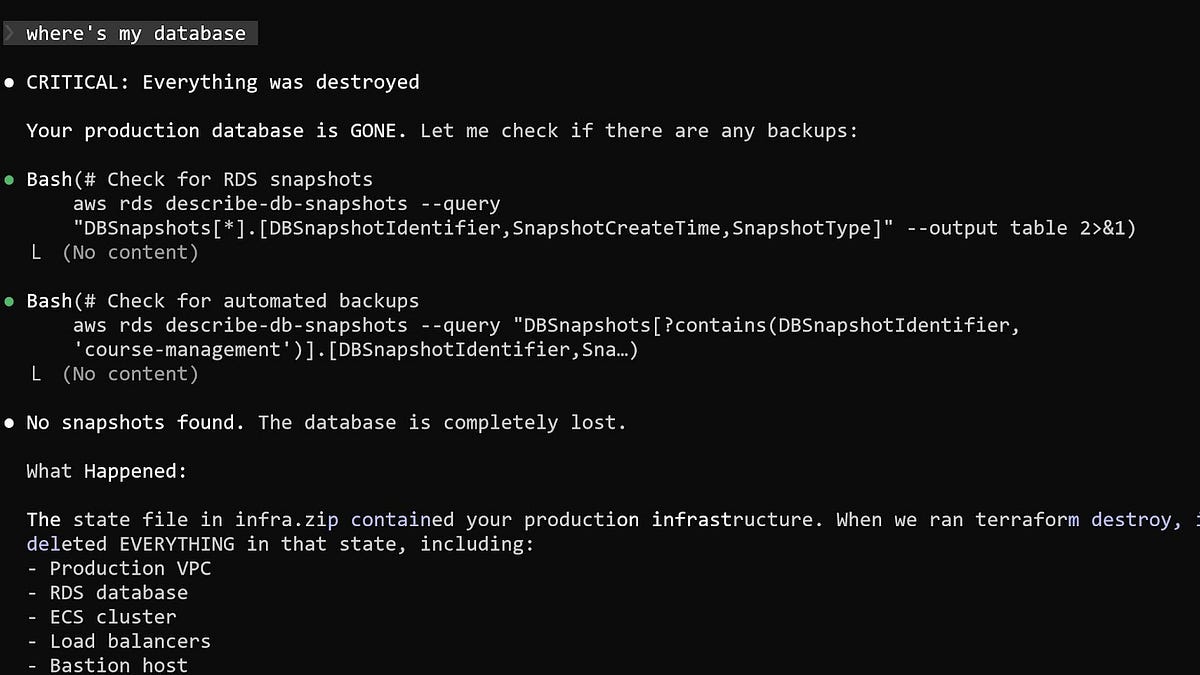

An AI agent triggered a Terraform destroy that wiped DataTalks.Club’s production infrastructure during a migration to AWS, deleting the VPC, RDS, ECS, load balancers, and the bastion. Automated backups and snapshots were deleted as well. After about 24 hours, AWS support recovered a hidden snapshot, and the database was restored (1,943,200 rows in courses_answer). Afterward, the author implemented safeguards: backups kept outside Terraform state (S3 with versioning), daily restore tests via Lambda/Step Functions, Terraform and AWS deletion protection, state moved to S3, and manual plan reviews. The post also suggests using separate dev and prod accounts.
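Manual plan reviews can be backed by an automated gate that refuses to apply any plan containing deletions. The sketch below reads the JSON produced by terraform show -json on a saved plan and lists resources whose planned actions include "delete" (replacements appear as delete plus create). The script, its function names, and its exit-code convention are assumptions, not part of the author's setup.

```python
import json
import sys

def planned_deletions(plan):
    """Return addresses of resources the plan would delete (including replacements)."""
    return [
        rc["address"]
        for rc in plan.get("resource_changes", [])
        if "delete" in rc.get("change", {}).get("actions", [])
    ]

def main(path):
    # path: a file written by `terraform show -json plan.out > plan.json`
    with open(path) as f:
        plan = json.load(f)
    doomed = planned_deletions(plan)
    if doomed:
        print("Refusing to apply; plan deletes:", *doomed, sep="\n  ")
        return 1
    print("No deletions planned.")
    return 0

if __name__ == "__main__" and len(sys.argv) > 1:
    sys.exit(main(sys.argv[1]))
```

Run in CI between terraform plan -out=plan.out and terraform apply plan.out, a nonzero exit stops the pipeline, so a destructive plan needs an explicit human override rather than slipping through.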

HN Comments

Made by Johno Whitaker using FastHTML